Maia Chaka, First Black Woman to Officiate an NFL Game, Kicks off Women’s History Month

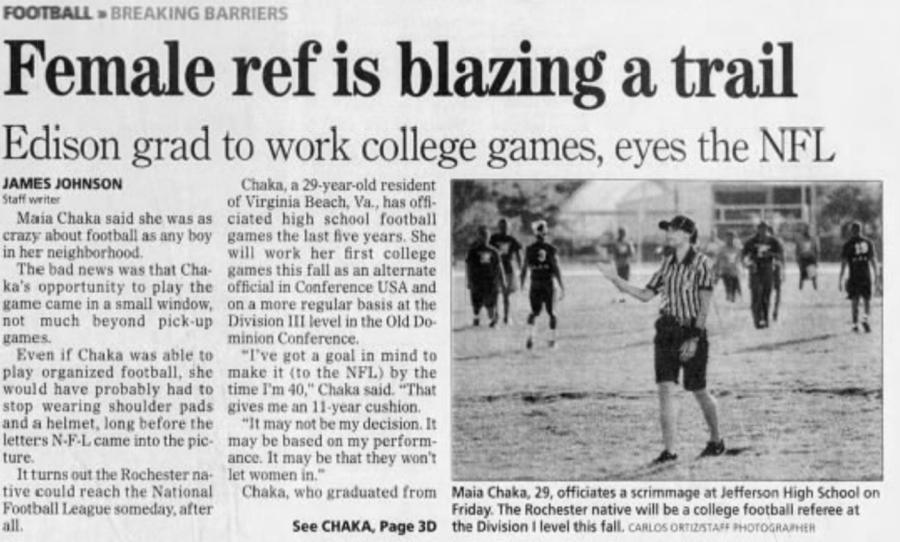

Credit to Democrat and Chronicle

A news article from the Democrat and Chronicle’s ROC Sports section on July 2, 2011, highlights Maia Chaka’s move up to Division I officiating.

On Tuesday, March 1, ODU’s Women and Gender Equity Center (W&GEC) kicked off its Women’s History Month schedule with a keynote event called “Making Her-Story,” which featured Maia Chaka. Chaka is the first Black woman to be hired by the NFL to officiate on-field, as well as the first to officiate an NFL game.

Every year ODU’s W&GEC has a theme for Women’s History Month, and this year the theme is promoting healing and hope.

“This theme is both a tribute to the ceaseless work of caregivers and frontline workers during this ongoing pandemic, and also in recognition of the thousands of ways that women of all cultures have provided both healing and hope throughout history,” said Ericka Harrison-Bey, the assistant director of the Women & Gender Equity Center. “One way with women to embody this theme is to highlight women in sports and leadership, who are exemplifying what it means to provide healing and promote hope through making history.”

Chaka was introduced by ODU women’s basketball coach DeLisha Milton-Jones, who is a retired WNBA player and a two-time Olympic gold medalist.

“I want to thank our community for honoring women, not only in sports and leadership, but of all backgrounds, identities, and experiences, and for your support of recognizing and amplifying their achievements, contributions, and representation for women’s history month,” said Milton-Jones. “In [Chaka’s] personal journey, accomplishments, and giving back to her community, her story is one which has inspired hope to many girls and women, illuminating paths to healing.”

Chaka started officiating high school football games in 2007, before moving up to officiate the VHSL championship game in 2009. In 2011, she served as an alternate official with Conference USA and was a referee for Division I football. Chaka jumped from officiating high school to Division I because of help from Gerald Austin, who refereed three Super Bowl games.

“He said ‘there were so many people in that room that didn’t want you there, there were so many people who were waiting for you to fail along the way, I wanted to make sure that you were going to be okay,’” said Chaka. “He surrounded me by a bunch of veteran officials to let me be able to learn. He did that for about three years. And I finally became good.”

Chaka placed emphasis on the help she received from Gerald Austin and Tony Brothers, because they helped to open doors for her that were otherwise closed. She remembers attending an event and being asked whose wife she was, rather than being treated as an attendee.

“It is so important that we have the support of males when we start talking about gender equity,” she said. “To have the support of somebody like that, as a woman, as a minority woman, [to] know that there’s somebody at the top of the game that’s trying to reach back, try to help out the others on the grassroots level, that means so much.”

Chaka joined the NFL’s Officiating Development Program in 2014 and began working the regular season for the Pac-12 Conference in 2018. She branched out in 2019 and began officiating NCAA Division I women’s basketball. Chaka was promoted to the NFL as an on-field official in March 2021 and became the first Black woman to officiate an NFL game when she worked as a line judge for the New York Jets vs. Carolina Panthers game on September 12, 2021. Chaka is also the third female referee in professional football history.

“So this moment here, this moment for me, is bigger than the personal accomplishment. It absolutely is,” Chaka said. “It’s not about me. I’m just the one that has been given this platform. So I continue to create more avenues, more platforms for accomplished women.”

Chaka is choosing to give back through a nonprofit called Make Meaningful Change (MMC). She also works as a physical education teacher in the Virginia Beach Public School System.

Chaka asked current students from the high school she attended, Edison Technology, to design her logo for MMC.

“I wanted to be able to give them real life work experiences, regardless of gender, regardless of color, regardless of what you look like or what you choose to wear,” she said.

Ultimately, Chaka believes in “lowering the ladder.”

“I don’t want to be by myself,” she said. “If we constantly challenge each other, as women, and when we get those leadership positions we create other opportunities for women – not to say that these men aren’t great, but you guys have been in charge for a very long time. We have to create more female leaders. We have to. We need the support from males to lower the ladder.”

She ended the keynote event with a challenge. “Today, tomorrow, next week, if it takes you five years from now, I don’t care. Just as long as you’re able to bring somebody up with you. Bring somebody up along the way.”

Other events by ODU’s W&GEC for Women’s History Month can be found here.

Sydney Haulenbeek is an English major and senior, graduating in May of 2023. Before becoming the Editor in Chief of the Mace & Crown she worked as...